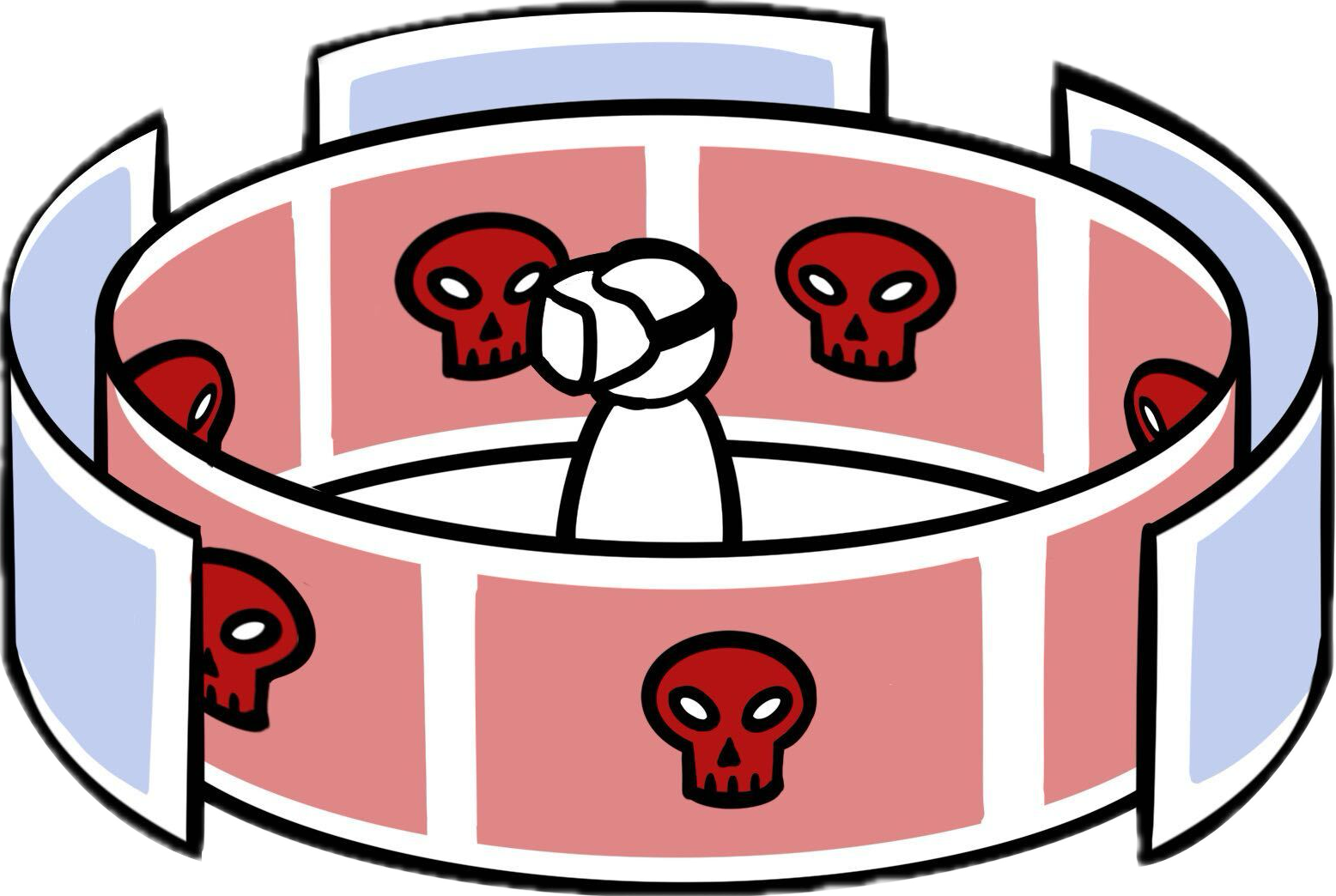

Implemented on ARKit - Exploited Same Space Property

When the victim launches the application, an advertising platform places a third-party ad in a bounded region of the main app. A revenue-hungry developer (the attacker) then places a new interactive bait object in the same space as the advertisement. This object displays the message "click here to win your free cookie" to bait user clicks. However, the user's interaction with the bait object actually triggers the underlying ad, so the attacker "steals" revenue from the click.

Abstract

Augmented reality (AR) experiences place users inside the user interface (UI), where they can see and interact with three-dimensional virtual content. This paper explores UI security for AR platforms, for which we identify three UI security-related properties: Same Space (how does the platform handle virtual content placed at the same coordinates?), Invisibility (how does the platform handle invisible virtual content?), and Synthetic Input (how does the platform handle simulated user input?). We demonstrate the security implications of different instantiations of these properties through five proof-of-concept attacks between distrusting AR application components. We then empirically investigate these UI security properties on five current AR platforms: ARCore (Google), ARKit (Apple), Hololens (Microsoft), Oculus (Meta), and WebXR (browser).

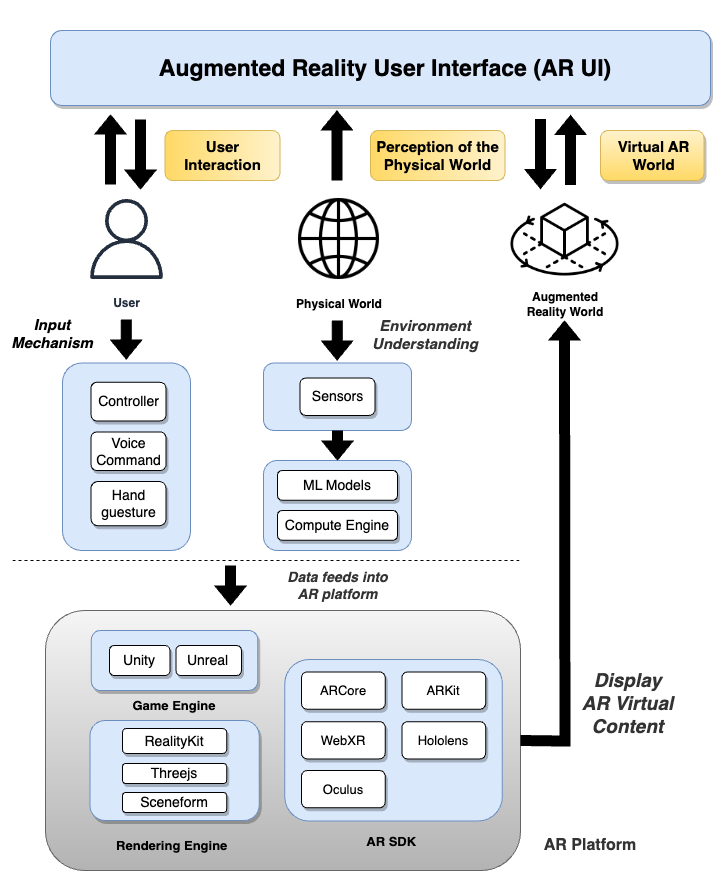

User Interface in Augmented Reality

Unlike traditional 2D contexts where users interact with UI content on flat screens, the AR UI is a conjunction of visual elements from the physical world, the AR virtual world, and the user's interactions within the immersive 3D world. The AR context requires that the system process and understand the physical world surrounding the user. Virtual content is often anchored to physical-world surfaces, which requires rapid updates in response to changes in the user's physical surroundings or position.

AR UI Properties

In this paper, we define three UI security-related properties for AR platforms:

Same Space: How do AR systems manage objects that share the same physical world mapping? For instance, when two AR objects with identical shapes and sizes are anchored at the same 3D coordinates, which object(s) become visible to the user? Which receive(s) the user's input?

Invisibility: How do AR systems handle virtual objects in the AR world that are transparent? To what extent, if any, does an object's visibility influence its functionality? For example, are transparent objects capable of receiving user input? What happens when a transparent object renders over another virtual object?

Synthetic Input: How do AR systems handle synthetic user input? For example, can adversarial code generate synthetic input to mimic human interaction, such as via a programmatically generated raycast?
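The three questions above can be made concrete with a toy model. The following is a minimal sketch, not any platform's actual behavior: `VirtualObject`, `hit_test`, `dispatch`, and the policy names are all hypothetical, and objects are reduced to a single depth along the user's input ray. Each policy argument corresponds to one property, and a real platform fixes each of them in some way.

```python
from dataclasses import dataclass

@dataclass
class VirtualObject:
    name: str
    depth: float        # distance from the user along the input ray
    alpha: float = 1.0  # 0.0 = fully transparent, 1.0 = opaque

def hit_test(objects, same_space_policy="first_placed",
             invisible_receives_input=True):
    """Return the object that receives input on this toy platform, or None."""
    # Invisibility: does a fully transparent object still take part in hit-testing?
    candidates = [o for o in objects
                  if invisible_receives_input or o.alpha > 0.0]
    if not candidates:
        return None
    nearest = min(o.depth for o in candidates)
    tied = [o for o in candidates if o.depth == nearest]
    # Same Space: several objects share the same coordinates; the policy decides.
    return tied[0] if same_space_policy == "first_placed" else tied[-1]

def dispatch(objects, synthetic=False, accept_synthetic=True, **policy):
    """Synthetic Input: does programmatically generated input reach objects at all?"""
    if synthetic and not accept_synthetic:
        return None
    return hit_test(objects, **policy)

ad = VirtualObject("ad", depth=1.0)
bait = VirtualObject("bait", depth=1.0)
overlay = VirtualObject("invisible_overlay", depth=0.5, alpha=0.0)
scene = [ad, bait]  # placement order: ad first, bait second

print(hit_test(scene, "first_placed").name)   # ad
print(hit_test(scene, "last_placed").name)    # bait
print(hit_test(scene + [overlay]).name)       # invisible_overlay
print(hit_test(scene + [overlay], invisible_receives_input=False).name)  # ad
print(dispatch(scene, synthetic=True, accept_synthetic=False))           # None
```

Changing a single policy flips which component receives the user's input, which is why the instantiation of each property matters for security.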

Attacks

We demonstrate the security implications of different instantiations of these properties through five proof-of-concept attacks between distrusting AR application components.

Clickjacking Attack

Denial-of-Service Attack

Implemented on Hololens - Exploited Invisibility Property

The victim/user launches the application and intends to select an AR object using a hand ray as the input mechanism. However, the attacker can overlay an invisible object on the targeted AR object to block the user from interacting with it. Alternatively, the attacker can overlay a large invisible object that encapsulates the user, such that the user is always physically inside the object. The user's interaction with any AR object is thus blocked regardless of their movements within the physical space.
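Both variants of this attack can be illustrated with a small ray-casting sketch. This is a toy model under stated assumptions, not HoloLens code: objects are spheres, `Sphere`, `ray_hit_distance`, and `first_hit` are hypothetical names, and the model assumes that a ray starting inside an object is captured by that object immediately. How a real platform resolves a ray that starts inside a collider may differ.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    name: str
    center: tuple
    radius: float
    visible: bool = True  # visibility plays no role in hit-testing in this toy model

def ray_hit_distance(origin, direction, sphere):
    """Distance along a unit-length ray to the sphere, or None on a miss."""
    oc = [o - c for o, c in zip(origin, sphere.center)]
    c = sum(v * v for v in oc) - sphere.radius ** 2
    if c <= 0:
        # ASSUMPTION: a ray that starts inside an object hits it at distance 0.
        return 0.0
    b = sum(d * v for d, v in zip(direction, oc))
    disc = b * b - c
    if disc < 0 or b > 0:
        return None  # ray misses the sphere or points away from it
    return -b - math.sqrt(disc)

def first_hit(origin, direction, spheres):
    """Return the nearest sphere along the ray, or None if nothing is hit."""
    hits = [(ray_hit_distance(origin, direction, s), s) for s in spheres]
    hits = [(t, s) for t, s in hits if t is not None]
    return min(hits, key=lambda h: h[0])[1] if hits else None

button = Sphere("button", center=(0, 0, 5), radius=0.5)
overlay = Sphere("overlay", center=(0, 0, 5), radius=0.6, visible=False)
cage = Sphere("cage", center=(0, 0, 0), radius=50.0, visible=False)

user, forward = (0, 0, 0), (0, 0, 1)
print(first_hit(user, forward, [button]).name)           # button
print(first_hit(user, forward, [button, overlay]).name)  # overlay

# The user walks to a new spot and aims at the button again; still inside the cage.
moved, aim = (3, 0, 1), (-0.6, 0.0, 0.8)
print(first_hit(moved, aim, [button, cage]).name)        # cage
```

The overlay variant blocks one target, while the enclosing variant blocks every target for as long as the user stays inside the volume.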

Input Forgery Attack

Implemented on ARCore - Exploited Synthetic Input Property

When the victim/user launches the application, the malicious application developer places a third-party ad in the user's view. The attacker then generates synthetic input to trigger interactions with the ad, inflating its interaction count, and later charges the respective advertisers for this inflated number of ad views.
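The core of this attack is that the ad cannot tell forged interactions from genuine ones. A minimal sketch follows, assuming a hypothetical `AdPanel` class and `forge_interactions` helper; neither corresponds to ARCore or to any real ad SDK.

```python
from dataclasses import dataclass

@dataclass
class AdPanel:
    """Hypothetical stand-in for a third-party ad that counts interactions."""
    interactions: int = 0

    def on_select(self):
        # ASSUMPTION: the ad receives no signal about where the event came from.
        self.interactions += 1

def forge_interactions(ad, count):
    """Attacker code: fire `count` programmatic selections at the ad."""
    for _ in range(count):
        ad.on_select()

ad = AdPanel()
ad.on_select()              # one genuine user interaction
forge_interactions(ad, 99)  # 99 forged ones
print(ad.interactions)      # 100
```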

Object-in-the-middle Attack

Implemented on Oculus - Exploited Invisibility Property and Synthetic Input Property

When the victim/user launches the application, it shows a mock authentication interface, part of the main app, that consists of a pin pad for the user to enter their password. We present a logger to illustrate the efficacy of the attack. A malicious third-party component places several transparent meshes in front of the pin pad entity to intercept the user's input. Once the transparent meshes detect the user's interaction, the malicious component casts a synthetic input to the pin pad, passing the user's input on to its intended destination.
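The intercept-log-forward pattern described above can be sketched in a few lines. This is an illustrative model only: `PinPad`, `TransparentInterceptor`, and `user_taps` are hypothetical names, and the sketch assumes the pin pad accepts synthetic input exactly as it accepts real input.

```python
class PinPad:
    """Stand-in for the main app's authentication UI."""
    def __init__(self):
        self.entered = []

    def on_input(self, key, synthetic=False):
        # ASSUMPTION: this pin pad treats synthetic and real input identically.
        self.entered.append(key)

class TransparentInterceptor:
    """Malicious component: an invisible mesh placed in front of the pin pad."""
    def __init__(self, target):
        self.target = target
        self.log = []

    def on_input(self, key, synthetic=False):
        self.log.append(key)                        # record the keystroke
        self.target.on_input(key, synthetic=True)   # forward it as synthetic input

def user_taps(front_most, keys):
    """The user's input lands on whichever object is front-most."""
    for key in keys:
        front_most.on_input(key)

pin_pad = PinPad()
mesh = TransparentInterceptor(pin_pad)
user_taps(mesh, "4821")

print("".join(pin_pad.entered))  # 4821
print("".join(mesh.log))         # 4821
```

Because the pin pad still receives every keystroke, the user sees the interface behave normally while the interceptor keeps a full copy of the input.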

Object Erasure Attack

Implemented on WebXR - Exploited Invisibility Property

When the user launches the application, it presents two competing third-party ad libraries. Code from one mock ad library then places a transparent mesh in the same space as the competing advertisement to erase it.

BibTeX

@article{chenguser,
  title={When the User Is Inside the User Interface: An Empirical Study of UI Security Properties in Augmented Reality},
  author={Cheng, Kaiming and Bhattacharya, Arkaprabha and Lin, Michelle and Lee, Jaewook and Kumar, Aroosh and Tian, Jeffery F and Kohno, Tadayoshi and Roesner, Franziska}
}


When the User Is Inside the User Interface:
